[Registration] Join TF58 to learn about the latest advances in vision foundation model research

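This session brings together representatives from companies and universities including Baidu, Microsoft, Google, UCSD, and the International Digital Economy Academy (IDEA) to share progress in vision foundation model research, covering self-supervised pretraining, image-text pretraining, and multimodal pretraining, and to discuss the current state and challenges of deploying vision foundation models in real applications. You are welcome to register and join the discussion. The event starts online at 9:30 a.m. on May 17.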

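A top-tier exchange platform for technical teams

CCF TF Session 58

Topic: Research and Applications of Vision Foundation Models

May 17, 2022, 9:30-11:30

Tencent Meeting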

Registration link: https://conf.ccf.org.cn/TF58

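Vision foundation models are currently a hot topic in both computer vision research and applications. Pretrained on large-scale data such as unlabeled data, image-text data, or multimodal data, they have improved performance on many downstream vision tasks and in certain vertical scenarios (such as OCR). However, research on vision foundation models, and on large models in particular, is still in its early stages, and how to create greater value in real application scenarios deserves further exploration.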

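Agenda

TF58: Research and Applications of Vision Foundation Models

Hosts: Zhongjun He (何中军), Chair of the CCF TF Algorithms and AI SIG and Chair of the Baidu AI Technology Committee; Jingdong Wang (王井东), Chief Scientist for Computer Vision at Baidu

| Time | Topic | Speaker |
| --- | --- | --- |
| 9:30-9:35 | Introduction and opening remarks | Zhongjun He |
| 9:35-9:55 | Context Autoencoder for Scalable Self-Supervised Representation Pretraining | Jingdong Wang, Chief Scientist for Computer Vision, Baidu |
| 9:55-10:15 | Florence: A New Foundation Model for Computer Vision | Bin Xiao, Senior Researcher, Computer Vision Research Group, Microsoft Cloud & AI |
| 10:15-10:35 | Label-Efficient Visual Perception via Multimodal Supervision and Distillation | Yin Cui, Senior Research Scientist at Google |
| 10:35-11:25 | Panel Discussion: A roadmap of vision foundation model | Jingdong Wang, Bin Xiao, Yin Cui, Lei Zhang, Zhuowen Tu |
| 11:25-11:30 | Closing summary | Zhongjun He |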

SIG

CCF TF Algorithms and AI

Session Chairs

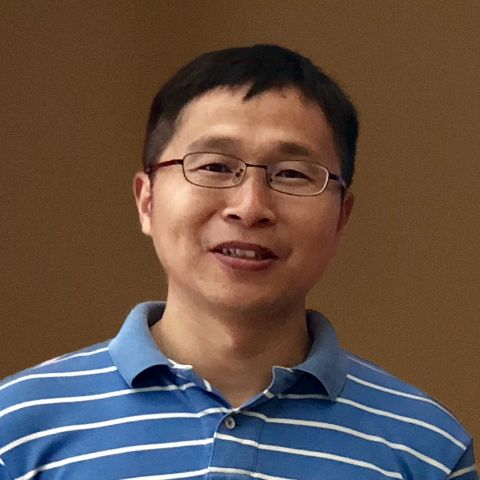

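Zhongjun He (何中军)

Chair of the CCF TF Algorithms and AI SIG; Chair of the Baidu AI Technology Committee

Bio: He has long worked on machine translation research and development, and developed the world's first internet-scale neural machine translation system as well as a semantic-unit-driven simultaneous machine interpretation system. His honors include the National Science and Technology Progress Award (Second Class), the Chinese Institute of Electronics Science and Technology Progress Award (First Class), the Beijing Science and Technology Progress Award (First Class), and the China Patent Silver Award.

Yitao Duan (段亦涛)

Chief Scientist, NetEase Youdao

Bio: He received his bachelor's and master's degrees from Beihang University and his Ph.D. in computer science from UC Berkeley in 2007. His research interests include large-scale distributed computing, data mining, machine learning, cryptography, and security and privacy. He joined Youdao during his Ph.D. studies and took part in building Youdao's underlying architecture. He is now Chief Scientist at NetEase Youdao, responsible for technical innovation and related practice. He focuses on applying the latest AI technologies, deep learning in particular, across internet domains, including machine translation and image recognition, and led the research and development of core technologies such as Youdao Neural Machine Translation (YNMT).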

Invited Speakers

Jingdong Wang (王井东)

Chief Scientist for Computer Vision, Baidu

Topic: Context Autoencoder for Scalable Self-Supervised Representation Pretraining

Abstract: Self-supervised representation pretraining aims to learn an encoder from unlabeled images, such that the encoded representations take on semantics and benefit downstream tasks. In this talk, I present a novel masked image modeling approach, context autoencoder (CAE), for scalable self-supervised representation training. The core ideas are that predictions are made in the latent representation space from visible patches to masked patches, and that the encoder is used only for representation learning while representation learning is done only by the encoder. I also discuss why masked image modeling potentially outperforms contrastive pretraining (e.g., SimCLR, MoCo) and why contrastive learning performs on par with supervised pretraining on ImageNet. In addition, I show that linear probing and its extended version, attentive probing, are more suitable than fine-tuning on ImageNet for pretraining evaluation.

Bio: Jingdong Wang is a Chief Scientist for computer vision with Baidu. His team focuses on product-driven and cutting-edge computer vision, deep learning, and AI research, and on developing practical computer vision applications. Before joining Baidu, he was a Senior Principal Researcher at Microsoft Research Asia. His areas of interest are computer vision, deep learning, and multimedia search. His representative works include deep high-resolution network (HRNet), discriminative regional feature integration (DRFI) for supervised saliency detection, and neighborhood graph search (NGS, SPTAG) for large-scale similarity search. He serves or has served as an Associate Editor of IEEE TPAMI, IJCV, IEEE TMM, and IEEE TCSVT, and as an area chair of leading conferences in vision, multimedia, and AI, such as CVPR, ICCV, ECCV, ACM MM, IJCAI, and AAAI. He was elected an ACM Distinguished Member, a Fellow of IAPR, and a Fellow of IEEE for his contributions to visual content understanding and retrieval.

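Bin Xiao (肖斌)

Senior Researcher, Computer Vision Research Group, Microsoft Cloud & AI

Topic: Florence: A New Foundation Model for Computer Vision

Abstract: Computer vision foundation models, which are trained on large-scale multimodal datasets and can be adapted to a wide range of downstream tasks by fine-tuning on a small amount of data, are critical for real-world computer vision applications. In late 2021, Microsoft released the Florence foundation model. Trained on large-scale image-text data from the web, it adapts easily to a variety of computer vision tasks, including classification, retrieval, object detection, visual question answering (VQA), image captioning, video retrieval, and action recognition. At release, it set new state-of-the-art results on most of 44 representative benchmarks, for example 85.7 top-1 accuracy on ImageNet-1K zero-shot classification, 90.45 top-1 accuracy on ImageNet-1K after fine-tuning, 62.4 mAP on COCO fine-tuning, and 80.36 on VQA.

Bio: He is a Senior Researcher in the Computer Vision Research Group at Microsoft Cloud & AI. His main research interests are computer vision, large-scale data and language multimodal model training, object detection and segmentation, and human pose estimation. He has published more than 20 papers at top conferences such as CVPR, ECCV, ICCV, ICLR, and AAAI. Several of his research results have been open-sourced and applied in Microsoft products such as Azure.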

Yin Cui (崔崟)

Senior Research Scientist at Google

Topic: Label-Efficient Visual Perception via Multimodal Supervision and Distillation

Abstract: In this talk, I will focus on two of our recent works (VATT and ViLD) towards building label-efficient computer vision models. In VATT, we learn multimodal representations from unlabeled raw video, audio, and text using a unified Transformer encoder. In ViLD, we distill from pre-trained vision-language models such as CLIP to enable strong open-vocabulary detection using off-the-shelf Mask R-CNN.

Bio: Yin Cui is a Senior Research Scientist at Google. Yin's research focuses on multimodal and label-efficient visual perception. Before joining Google, he received a Ph.D. in Computer Science from Cornell University in 2019, advised by Professor Serge Belongie. Yin also co-organized COCO Visual Recognition Workshops and Fine-Grained Visual Categorization Workshops at major computer vision conferences.

Lei Zhang (张磊)

Chair Scientist of Computer Vision and Robotics at IDEA

Bio: Lei Zhang is currently a Chair Scientist of Computer Vision and Robotics at the International Digital Economy Academy (IDEA) and an Adjunct Professor at the Hong Kong University of Science and Technology (Guangzhou). Prior to this, he was a Principal Researcher and Research Manager at Microsoft, where he had worked since 2001 in Microsoft Research Asia (MSRA), Microsoft Research (MSR, Redmond), and other computer vision-related product teams. He has led research teams for years, conducting research on computer vision with applications in large-scale image analysis, object detection, and vision-language understanding. His research has had practical impact on Bing Multimedia Search and Microsoft Cognitive Services. He has published more than 150 papers in top conferences and journals and holds more than 60 US-granted patents. He was named an IEEE Fellow for his contributions to large-scale visual recognition and multimedia information retrieval.

Zhuowen Tu (屠卓文)

Professor of Computer Science and Engineering, University of California San Diego

Bio: Zhuowen Tu is a full professor of Cognitive Science and is also affiliated with the Department of Computer Science and Engineering, University of California San Diego. Before joining UCSD in 2013 as an assistant professor, he was a faculty member at UCLA. Between 2011 and 2013, he took a leave to work at Microsoft Research Asia. He received his Ph.D. from the Ohio State University and his M.E. from Tsinghua University. He is a recipient of the 2003 David Marr Prize and of the 2015 David Marr Prize Honorable Mention. He is a Fellow of the IEEE.

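Attendance Notes

1. If you cannot attend after registering, please email tf@ccf.org.cn to cancel before the event starts. Unexplained absence will affect your participation in the next event.

2. The event is held online via Tencent Meeting. On mobile, search for "腾讯会议" (Tencent Meeting) among WeChat mini programs to join, or download the Tencent Meeting app. On desktop, search for "腾讯会议" to download the client and sign in.

3. The meeting ID and password will be sent by email and SMS on the day of the event. Enter them to join.

4. CCF members attend for free; non-members pay 99 yuan per session. Members can attend all 47 events of the year free of charge.

Member Benefits

Members attend all 47 CCF TF events of the year free of charge. It is a worthwhile investment in your own technical growth and a cost-effective way to gain professional knowledge.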

How to Attend

Registration link: https://conf.ccf.org.cn/TF58

Contact

Email: tf@ccf.org.cn

Phone: 0512-83912127

Mobile: 18912616058
