The CCF Advanced Disciplines Lectures

CCF ADL No. 79

Theme: Frontiers of Storage Devices and Systems

June 14-16, 2017, Beijing

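The rapid growth of big data, cloud computing, and the Internet of Things poses new challenges for the design and implementation of storage systems. Emerging storage devices such as flash solid-state drives, phase-change memory, and 3D XPoint hold great promise, but they also disrupt the traditional storage architecture, so the design philosophy and methodology of storage systems urgently need to be rethought. The relevant work spans key technologies at many levels, including devices, storage nodes, core software, and large-scale storage systems. These lectures aim to advance research and development of new storage technologies in China's academia and industry.

This session of the CCF Advanced Disciplines Lectures, "Frontiers of Storage Devices and Systems", brings together several well-known researchers from academia and industry. They will survey research progress in China and abroad on compute-storage integration, NVM storage systems for big data, data reduction, intelligent DPUs, in-memory computing, storage reliability, hierarchical NVM storage, and storage systems with efficient retrieval, and will discuss future trends in these technologies. The program is intended to help participants understand the current hot topics and open scientific problems in storage devices and systems, broaden their research horizons, foster academic exchange, and strengthen their practical skills.

Academic Directors: Jiwu Shu (Tsinghua University) and Yu Hua (Huazhong University of Science and Technology)

Organizer: China Computer Federation (CCF)

Exclusive Media Partner: Leiphone (雷锋网)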

Schedule

June 14, 2017

7:30-8:00 Registration

8:00-8:15 Opening Ceremony

8:15-10:15 Lecture 1

Accelerating Neural Network and Graph Processing with Memory-Centric Architecture

10:30-12:30 Lecture 2

Intelligent Storage Systems for Efficient Retrieval

12:30-13:30 Lunch

13:30-15:30 Lecture 3

On the Performance and Dependability of Large-Scale SSD Storage Systems

16:00-18:00 Lecture 4

Software-Layer Optimization of Storage Systems Based on Non-Volatile Memory

June 15, 2017

8:00-10:00 Lecture 5

Memory Centric Optimization: Keep the memory hierarchy but nothing else

10:30-12:30 Lecture 6

Introducing DPU - Data-storage Processing Unit - Placing Intelligence in Storage

Qing Yang, University of Rhode Island / Shenzhen Dapu Microelectronics Co. Ltd.

12:30-13:30 Lunch

13:30-15:30 Lecture 7

Memory-Centric Architectures to Close the Gap Between Computing and Memory/Storage

16:00-18:00 Lecture 8

Data Reduction in The Era of Big Data: Challenges and Opportunities

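June 16, 2017

8:00-10:00 Lecture 9

Building New Non-Volatile Storage Systems for Emerging Technologies and Applications in the Big Data Era

10:30-12:30 Lecture 10

Scalable In-memory Computing: A Perspective from Systems Software

12:30-13:30 Lunch

13:30-15:30 Academic Discussion

16:00-16:30 Group Photo and Closing Ceremony

(The schedule is subject to change; the on-site program prevails.)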

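Dates: June 14-16, 2017

Venue: First-floor Lecture Hall, Institute of Computing Technology, Chinese Academy of Sciences (No. 6 Kexueyuan South Road, Haidian District, Beijing)

Registration: Open now through June 12, 2017. Register at https://jinshuju.net/f/d88XbY or by scanning the registration QR code.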

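Registration and Payment Notes:

1. Places are allocated in order of registration, with priority for CCF members, until all places are filled. Please pay only after you receive the registration confirmation email.

2. Registration and payment on or before June 12, 2017: CCF members RMB 2,500 per person; non-members who join CCF when registering RMB 2,700 per person; non-members RMB 3,000 per person. On-site payment: RMB 4,000 per person for both members and non-members. Participants from CCF institutional members pay the CCF member rate.

3. Be sure to enter your CCF membership number on the registration form; registrants who omit it will be treated as non-members.

4. The registration fee covers lecture materials, video materials, and lunch on all three days; other meals, accommodation, and travel are at participants' own expense.

5. If you have paid but cannot attend for personal reasons, 15% of the registration fee will be withheld (an invoice for service fees will be issued).

6. This ADL session offers two free places (registration fee waived) for universities in remote regions, restricted to CCF members. Applicants must submit a personal written application bearing their university's official seal and email an electronic copy to adl@ccf.org.cn; CCF will admit applicants in the order applications are received.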

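Payment Method:

Bank transfer (online banking and Alipay accepted):

Bank: Bank of Beijing, Peking University Sub-branch

Account name: 中国计算机学会 (China Computer Federation)

Account number: 0109 0519 5001 2010 9702 028

Required remittance note: your name + ADL79

Contact email: adl@ccf.org.cn

Phone: 010-62600321-16

Appendix: Invited Speakers and Lecture Abstracts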

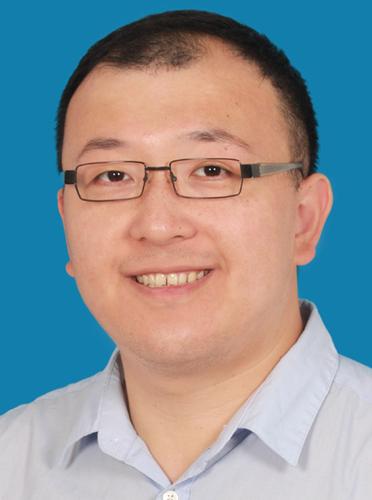

Xuehai Qian (钱学海)

University of Southern California

Xuehai Qian is an assistant professor in the Ming Hsieh Department of Electrical Engineering and the Department of Computer Science at the University of Southern California. He received his Ph.D. from the Computer Science Department at the University of Illinois at Urbana-Champaign. He has made contributions to parallel computer architecture, including cache coherence for atomic block execution, memory consistency checking, and architectural support for deterministic record and replay. His recent research interests include system and architectural support for graph processing, transactions for non-volatile memory, and acceleration of machine learning and graph processing using emerging technologies.

Lecture title: Accelerating Neural Network and Graph Processing with Memory-Centric Architecture

Abstract: In recent years, deep neural networks have been found to outperform other supervised learning algorithms on a number of important classification and regression tasks. Graph processing has also received intense interest, driven by a wide range of needs to understand relationships. However, these applications pose great challenges to the traditional von Neumann architecture. Neural networks are both compute- and memory-intensive: their many layers and millions of weights incur a large amount of data movement. Graph applications are also memory-bound, and the large number of random memory accesses during neighborhood traversal makes memory bandwidth the bottleneck. This talk will discuss recent work on accelerating neural network and graph processing using 3D-stacked DRAM (HMC) and emerging memory technologies.
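To make "random accesses during neighborhood traversal" concrete, here is a minimal sketch that assumes the graph is stored in the common compressed sparse row (CSR) layout; the function names `to_csr` and `neighbor_sum` are hypothetical and are not taken from the talk. The offset and neighbor arrays are read sequentially, but each `values[dst]` lookup jumps to an address determined by the graph's structure, which is the irregular gather traffic that strains memory bandwidth.

```python
def to_csr(num_vertices, edges):
    """Build CSR arrays (offsets, neighbors) from a list of directed (src, dst) edges."""
    offsets = [0] * (num_vertices + 1)
    for src, _ in edges:
        offsets[src + 1] += 1              # count the out-degree of each vertex
    for v in range(num_vertices):
        offsets[v + 1] += offsets[v]       # prefix sum -> start index of each vertex
    neighbors = [0] * len(edges)
    cursor = list(offsets[:-1])            # next free slot for each vertex
    for src, dst in edges:
        neighbors[cursor[src]] = dst
        cursor[src] += 1
    return offsets, neighbors


def neighbor_sum(offsets, neighbors, values):
    """Sum a per-vertex property over each vertex's out-neighbors.

    offsets/neighbors are scanned in order, but values[dst] is a gather whose
    addresses depend on the graph structure (a data-dependent, random access).
    """
    result = []
    for v in range(len(offsets) - 1):
        total = 0
        for i in range(offsets[v], offsets[v + 1]):
            total += values[neighbors[i]]  # irregular access into the property array
        result.append(total)
    return result


offsets, neighbors = to_csr(4, [(0, 2), (0, 3), (1, 0), (2, 3), (3, 1)])
print(offsets, neighbors)                                  # [0, 2, 3, 4, 5] [2, 3, 0, 3, 1]
print(neighbor_sum(offsets, neighbors, [10, 20, 30, 40]))  # [70, 10, 40, 20]
```

In a real graph with millions of vertices, consecutive `neighbors[i]` values are rarely close together, so most property lookups miss in the cache.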

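Yu Hua (华宇)

Huazhong University of Science and Technology

Yu Hua is a professor and doctoral supervisor at Huazhong University of Science and Technology, a senior member of CCF, ACM, and IEEE, a CCF Distinguished Speaker, and a member of the CCF technical committees on information storage, high-performance computing, and computer architecture. His research focuses on emerging storage devices and networked storage systems. He has published numerous papers at international conferences including USENIX ATC, FAST, SC, INFOCOM, HPDC, ICDCS, MSST, and DATE, and in international journals including IEEE TC/TPDS/TII, ACM TACO, and PIEEE. He has served on the program committees of ASPLOS (ERC), USENIX ATC, EuroSys (Heavy Shadow), RTSS, ICDCS, INFOCOM, IPDPS, ICNP, MSST, ICPP, LCTES, and other international conferences, and is an editorial board member of the international journals FCS and JCN. He has given invited talks at Argonne National Laboratory, Oak Ridge National Laboratory, the University of California, Riverside, and other institutions. He was selected for the Hubei Province Chenguang Program for Young Scientists, and his research has received the TST journal's Best Paper of the Year award and a Second Prize in Electronic Information Science and Technology from the Chinese Institute of Electronics.

Lecture title: Intelligent Storage Systems for Efficient Retrieval

Abstract: The performance and effectiveness of data retrieval are key to improving the performance of the whole storage system. New storage systems must manage and maintain massive numbers of files, support fast concurrent access, and scale efficiently. Massive data is characterized by huge volume, heterogeneous types, timeliness constraints, and sparse value, which poses new challenges for the design and implementation of storage systems built on non-volatile devices. The lecture will comprehensively analyze the solutions proposed in related work in China and abroad, focusing on hashing methods that exploit the locality of data content and access patterns, together with the construction of the related indexes and data-organization structures. Using examples from real systems, it will also explain how these methods are applied to metadata management, data deduplication, and NVM write-pattern analysis.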
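Hashing that respects content locality can be illustrated with MinHash plus banded locality-sensitive hashing (LSH), a standard technique for finding near-duplicate content. This is a minimal sketch under that assumption, not the speaker's specific scheme; the helper names and parameters (4-character shingles, 64 hash functions, 16 bands) are hypothetical choices. Similar inputs tend to share at least one band key, so a storage system can find candidate near-duplicates, for example for deduplication or metadata grouping, without comparing every pair.

```python
import hashlib


def shingles(text, k=4):
    """Set of overlapping k-character substrings (the content features being hashed)."""
    return {text[i:i + k] for i in range(len(text) - k + 1)}


def _h(seed, item):
    """Seeded 64-bit hash from BLAKE2b; each seed acts as a different hash function."""
    digest = hashlib.blake2b(f"{seed}:{item}".encode(), digest_size=8).digest()
    return int.from_bytes(digest, "big")


def minhash(items, num_hashes=64):
    """MinHash signature: for each hash function, keep the minimum value over the set."""
    return [min(_h(seed, x) for x in items) for seed in range(num_hashes)]


def similarity(sig_a, sig_b):
    """Fraction of matching positions, an estimate of the sets' Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def lsh_keys(sig, bands=16):
    """Split the signature into bands; similar sets are likely to share a band key."""
    rows = len(sig) // bands
    return {(b, tuple(sig[b * rows:(b + 1) * rows])) for b in range(bands)}


a = minhash(shingles("the quick brown fox jumps over the lazy dog"))
b = minhash(shingles("the quick brown fox jumps over the lazy cat"))
c = minhash(shingles("0123456789"))
print(similarity(a, b), similarity(a, c))
print(bool(lsh_keys(a) & lsh_keys(b)), bool(lsh_keys(a) & lsh_keys(c)))
```

Using more bands with fewer rows per band finds more candidates at the cost of more false positives; the split is a tunable design choice.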

Patrick P. C. Lee (李柏晴)

The Chinese University of Hong Kong

Patrick P. C. Lee received the B.Eng. degree (first-class honors) in Information Engineering from the Chinese University of Hong Kong in 2001, the M.Phil. degree in Computer Science and Engineering from the Chinese University of Hong Kong in 2003, and the Ph.D. degree in Computer Science from Columbia University in 2008. He was a postdoctoral researcher at University of Massachusetts, Amherst in 2008-2009. He is now an Associate Professor of the Department of Computer Science and Engineering at the Chinese University of Hong Kong. He currently heads the Applied Distributed Systems Lab and is working very closely with a group of graduate students on different projects in networks and systems. He was a collaborator with Alcatel-Lucent in developing network management solutions for 3G wireless networks in 2007-2011. His research interests are in various applied/systems topics including storage systems, distributed systems and networks, operating systems, dependability, and security.

Lecture title: On the Performance and Dependability of Large-Scale SSD Storage Systems

摘要:Solid-state drives (SSDs) have been widely deployed in desktops and large-scale data centers due to their high I/O performance and low energy consumption. However, there is a performance-dependability trade-off in the design space of SSDs. In this talk, we will study the design trade-off issues in large-scale SSDs from three aspects: performance, reliability, and durability. We review state-of-the-art studies on modeling, measurement, and system design. In particular, we study how parity-based RAID redundancy affects the performance-dependability trade-off of SSDs.
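The parity trade-off mentioned above can be shown in a few lines. This minimal sketch assumes a single-parity (RAID-5-style) stripe with the parity block kept in a fixed position for simplicity (real RAID-5 rotates parity across devices); all function names are hypothetical. Parity lets any single lost block be rebuilt, but every small write also rewrites the parity block, which on SSDs means extra writes and extra wear.

```python
from functools import reduce


def xor_blocks(blocks):
    """Bytewise XOR of equal-length blocks."""
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))


def make_stripe(data_blocks):
    """RAID-5-style stripe: the data blocks followed by one XOR parity block."""
    return list(data_blocks) + [xor_blocks(data_blocks)]


def rebuild(stripe, lost):
    """Recover the block at index `lost` by XOR-ing every surviving block."""
    return xor_blocks([blk for i, blk in enumerate(stripe) if i != lost])


def small_write(stripe, index, new_block):
    """Read-modify-write update of one data block.

    parity' = parity XOR old_data XOR new_data, so one logical write costs
    two device writes (data + parity): extra flash wear in exchange for
    tolerating a single device failure.
    """
    old = stripe[index]
    stripe[-1] = xor_blocks([stripe[-1], old, new_block])
    stripe[index] = new_block
    return 2  # device writes issued


stripe = make_stripe([b"\x01\x02", b"\x10\x20", b"\xff\x00"])
print(stripe[-1].hex())                   # ee22
print(rebuild(stripe, 1) == b"\x10\x20")  # True
```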

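Jiwu Shu (舒继武)

Tsinghua University

Jiwu Shu, Ph.D., is a professor in the Department of Computer Science and Technology at Tsinghua University, a Changjiang Scholar Distinguished Professor of the Ministry of Education, and a recipient of the National Science Fund for Distinguished Young Scholars. He is vice director of the CCF Technical Committee on Information Storage Technology and vice director of the National Engineering Laboratory for Disaster Backup and Recovery. He serves as an Associate Editor of ACM Transactions on Storage and on the editorial boards of journals including Chinese Journal of Computers, Journal of Software, and Journal of Computer Research and Development. His research covers networked (cloud/big data) storage systems, new NVM storage systems and technologies, storage reliability and security, and parallel/distributed processing. His results have been published at major international conferences including FAST, USENIX ATC, MICRO, ISCA, EuroSys, DAC, DSN, IPDPS, and MSST, and in leading journals such as IEEE/ACM Transactions. He has received a Second Prize of the National Science and Technology Progress Award, a Second Prize of the National Technological Invention Award, and three first- or second-class ministerial-level science and technology awards.

Lecture title: Software-Layer Optimization of Storage Systems Based on Non-Volatile Memory

Abstract: In recent years, flash technology has matured and been widely deployed, and new non-volatile memory devices such as 3D XPoint and PCM have also advanced considerably. However, flash and other new non-volatile memories differ substantially from traditional disks and DRAM, with different characteristics in volatility, lifetime, read/write performance, addressing, and storage density. Existing storage-system software layers were designed for disks and DRAM; they cannot fully exploit the characteristics of non-volatile devices and may even seriously degrade their lifetime and performance. This talk will discuss the problems, opportunities, and challenges that system software layers face when building flash-based secondary storage and byte-addressable non-volatile main-memory systems, and will review recent research progress in each, including file systems, I/O path optimization, programming models and interfaces, and network protocols in distributed file systems.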
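One way flash breaks disk-oriented assumptions is that a flash page cannot be overwritten in place. The toy page-mapped flash translation layer (FTL) below is a minimal sketch under that assumption, not a design from the talk; the class and method names are hypothetical, and garbage collection and wear leveling are deliberately omitted. Each update is redirected to a fresh physical page and the old copy is only marked invalid, which is why address mapping and garbage collection become central concerns for flash-oriented software.

```python
class PageMappedFTL:
    """Toy page-level flash translation layer with out-of-place updates."""

    def __init__(self, num_pages):
        self.pages = [None] * num_pages    # physical page contents
        self.valid = [False] * num_pages   # which physical pages hold live data
        self.l2p = {}                      # logical page number -> physical page number
        self.next_free = 0                 # log-style append point

    def write(self, lpn, data):
        if self.next_free == len(self.pages):
            # A real FTL would garbage-collect invalid pages here (omitted).
            raise RuntimeError("out of free pages")
        old = self.l2p.get(lpn)
        if old is not None:
            self.valid[old] = False        # stale copy is invalidated, not overwritten
        ppn = self.next_free
        self.pages[ppn] = data
        self.valid[ppn] = True
        self.l2p[lpn] = ppn
        self.next_free += 1
        return ppn

    def read(self, lpn):
        return self.pages[self.l2p[lpn]]


ftl = PageMappedFTL(4)
ftl.write(0, "v1")
ftl.write(0, "v2")  # same logical page, new physical page
print(ftl.read(0), ftl.l2p[0], ftl.valid)  # v2 1 [False, True, False, False]
```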

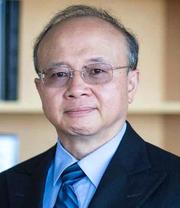

Xian-He Sun (孙贤和)

Illinois Institute of Technology

Dr. Xian-He Sun is a University Distinguished Professor of Computer Science in the Department of Computer Science at the Illinois Institute of Technology (IIT). He is the director of the Scalable Computing Software laboratory at IIT and a guest faculty member in the Mathematics and Computer Science Division at Argonne National Laboratory. Before joining IIT, he worked at DoE Ames National Laboratory, at ICASE, NASA Langley Research Center, and at Louisiana State University, Baton Rouge, and was an ASEE fellow at Navy Research Laboratories. Dr. Sun is an IEEE Fellow and is known for his memory-bounded speedup model, also called Sun-Ni's Law, for scalable computing. His research interests include data-intensive high-performance computing, memory and I/O systems, software systems for big data applications, and performance evaluation and optimization. He has over 250 publications and 5 patents in these areas. He is a former IEEE CS Distinguished Speaker, a former vice chair of the IEEE Technical Committee on Scalable Computing, and the past chair of the Computer Science Department at IIT, and he serves and has served on the editorial boards of leading professional journals in parallel processing. More information about Dr. Sun can be found at his web site www.cs.iit.edu/~sun/.

Lecture title: Memory Centric Optimization: Keep the memory hierarchy but nothing else

Abstract: Computing has changed from compute-centric to data-centric. Many new architectures, such as GPUs, FPGAs, and ASICs, have been introduced to match computer systems to applications' data requirements and thereby improve overall performance. In this talk we introduce a series of fundamental results and their associated mechanisms for performing this matching automatically through both hardware and software optimizations. We first present the Concurrent-AMAT (C-AMAT) data access model, which unifies the impact of data locality, concurrency, and overlapping. We then introduce the pace-matching data-transfer design methodology for optimizing memory system performance. Based on pace-matching design, a memory hierarchy is built to mask the performance gap between the CPU and memory devices. C-AMAT is used to calculate the data-transfer request/supply ratio at each memory layer, and a global control algorithm, named layered performance matching (LPM), is developed to match the data transfer at each memory layer and thus match the overall performance of the CPU and the underlying memory system. This holistic pace-matching optimization is very different from conventional locality-based system optimization. Analytic results show that the pace-matching approach can minimize memory-wall effects, and experimental testing confirms the theoretical findings, with a 150x reduction in memory stall time. We will present the concept of pace-matching data transfer, the design of C-AMAT and LPM, and several experimental case studies. We will also discuss optimization and research issues related to pace-matching data transfer and to memory systems in general.
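For orientation, the classic average memory access time is AMAT = H + MR × AMP (hit time, miss rate, average miss penalty). In the C-AMAT model as we understand it, the hit and miss terms are each divided by the concurrency available to them: C-AMAT = H / C_H + pMR × pAMP / C_M, where C_H is hit concurrency, pMR and pAMP are the pure miss rate and pure average miss penalty (counting only miss cycles not overlapped with hit activity), and C_M is pure miss concurrency. Treat these definitions as our summary rather than the lecture's, and note that the parameter values below are invented purely for illustration.

```python
def amat(hit_time, miss_rate, miss_penalty):
    """Classic sequential model: every access pays the hit time; misses add the penalty."""
    return hit_time + miss_rate * miss_penalty


def c_amat(hit_time, hit_concurrency, pure_miss_rate, pure_miss_penalty,
           pure_miss_concurrency):
    """Concurrent AMAT: hit and pure-miss costs are amortized over accesses in flight.

    Only 'pure' misses (miss cycles not overlapped with hit activity) are charged,
    so locality, concurrency, and overlapping all appear in one expression.
    """
    return (hit_time / hit_concurrency
            + pure_miss_rate * pure_miss_penalty / pure_miss_concurrency)


# Illustrative, made-up parameters in cycles; not measurements from the lecture.
print(amat(1, 0.05, 200))          # 11.0
print(c_amat(1, 4, 0.02, 200, 2))  # 2.25
```

The gap between the two numbers shows why a purely locality-based view can overstate the cost of misses when the memory system overlaps many accesses.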

Qing Yang (杨庆)

University of Rhode Island

Shenzhen Dapu Microelectronics Co. Ltd.

Qing Yang is an endowed chair professor at the University of Rhode Island and an IEEE Fellow. He has worked on computer architecture and data storage for more than 20 years, has published more than 120 papers, and holds more than 20 granted patents. Building on his research, he has founded four high-tech companies; the most recent, VeloBit, known for its state-of-the-art flash SSD architecture, was acquired by Western Digital at a high price. He has chaired a number of international conferences on computer architecture, including ISCA 2011, and has served as membership chair of the IEEE Technical Committee on Computer Architecture, an IEEE Distinguished Speaker, and an editorial board member of IEEE Transactions. He has received the outstanding professor award eight times. Many of his former Ph.D. students teach at well-known U.S. universities or work at high-tech companies, including as Intel Fellows.

Lecture title: Introducing DPU - Data-storage Processing Unit - Placing Intelligence in Storage

Abstract: Cloud computing and big data applications require data storage systems that deliver high performance reliably and securely. The central piece, the brain, of a storage system is the controller that manages the storage. However, all existing storage controllers have their limitations. As data grow larger, more storage technologies emerge, and applications spread wider, existing controllers cannot keep pace with the rapid growth of big data. We introduce, and are currently building, a storage controller with built-in intelligence, referred to as a DPU (Data-storage Processing Unit), to manage, control, analyze, and classify big data at the place where they are stored. The idea is to place sufficient intelligence closest to the storage devices, which are experiencing revolutionary changes with the emergence of storage class memories such as flash, PCM, MRAM, memristors, and so forth. Machine learning logic is a major part of the DPU; it learns I/O behavior inside the storage to optimize performance, reliability, and availability. Advanced security techniques are implemented inside the storage device. Deep learning techniques train on and analyze big data inside the storage device, and reinforcement learning optimizes the storage hierarchy. Parallel and pipelining techniques process stored data by exploiting the inherent parallelism inside SSDs. Our preliminary experimental data show promising results that could potentially change the landscape of the storage market.

Yuan Xie (谢源)

University of California, Santa Barbara

Yuan Xie is a professor at UC Santa Barbara (UCSB). He received his Ph.D. from Princeton University and then joined IBM Microelectronics as an advisory engineer. From 2003 to 2014 he was with Penn State as an assistant, associate, and full professor. He also worked with AMD Research in 2012-2013. His research interests include EDA, computer architecture, and VLSI, and he has published more than 200 papers in IEEE/ACM venues. He was elevated to IEEE Fellow for contributions to design automation and architecture for 3D ICs. He is currently the Editor-in-Chief of the ACM Journal on Emerging Technologies in Computing Systems.

Lecture title: Memory-Centric Architectures to Close the Gap Between Computing and Memory/Storage

Abstract: Traditional computer systems usually follow the classic von Neumann architecture, with separate processing units (such as CPUs and GPUs) for computing and separate memory units for data storage. The growing gap between processor and memory performance has created the “memory wall” problem, in which data movement between the processing units (PUs) and data storage is becoming the bottleneck of the entire computing system, from cloud servers to end-user devices. As we enter the era of big data, many emerging data-intensive workloads have become pervasive and demand very high bandwidth and heavy data movement between the computing units and memory/storage. This talk will discuss the design opportunities and challenges for memory-centric architectures, which are proposed to close the gap between computing and data storage, using emerging data-intensive applications such as neural computing and graph analytics as drivers to guide architecture optimization.

Hong Jiang (江泓)

University of Texas at Arlington

Hong Jiang received the B.Sc. degree in Computer Engineering in 1982 from Huazhong University of Science and Technology, Wuhan, China; the M.A.Sc. degree in Computer Engineering in 1987 from the University of Toronto, Toronto, Canada; and the Ph.D. degree in Computer Science in 1991 from Texas A&M University, College Station, Texas, USA. He is currently Chair and Wendell H. Nedderman Endowed Professor of the Department of Computer Science and Engineering at the University of Texas at Arlington. Before joining UTA, he served as a Program Director at the National Science Foundation (January 2013 to August 2015), and from 1991 he was at the University of Nebraska-Lincoln, where he was Willa Cather Professor of Computer Science and Engineering. His present research interests include computer architecture, computer storage systems and parallel I/O, high-performance computing, big data computing, cloud computing, and performance evaluation. He has graduated 16 Ph.D. students, who now work at major IT companies or in academia. He has over 200 publications in major journals and international conferences in these areas. Dr. Jiang is a Fellow of IEEE and a Member of ACM.

Lecture title: Data Reduction in The Era of Big Data: Challenges and Opportunities

Abstract: We are living in a rapidly changing digital world where we are inundated by an ocean of data that is being generated at a rate of 2.5 billion GB every single day! It is projected that by 2017 humans will have generated 16 trillion GB of digital data. This phenomenal growth and ubiquity of data has ushered in an era of “Big Data”, which brings with it new challenges as well as opportunities. In this talk, I will first discuss the challenges and opportunities that big data presents for computer and storage systems research, with an emphasis on the research questions raised by the almost unfathomable volumes of data, namely those dealing with data reduction in the face of big data. I will then present some recent solutions proposed by my research group that address the performance and space issues facing big data and data-intensive applications by means of data reduction.
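Deduplication is one of the most widely used data-reduction techniques. As a minimal sketch (not the method of the speaker's group), the code below splits data into fixed-size chunks, fingerprints each chunk with SHA-256, stores each unique chunk once, and keeps an ordered "recipe" of fingerprints for rebuilding the original. Fixed-size chunking and the 4 KiB default are simplifying assumptions; production systems often use content-defined chunking so that an insertion does not shift every later chunk boundary. The function names are hypothetical.

```python
import hashlib


def dedup(data, chunk_size=4096):
    """Fixed-size chunk deduplication.

    Returns (store, recipe): store maps SHA-256 fingerprint -> unique chunk,
    and recipe is the ordered list of fingerprints needed to rebuild `data`.
    """
    store, recipe = {}, []
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        fingerprint = hashlib.sha256(chunk).hexdigest()
        store.setdefault(fingerprint, chunk)  # keep only the first copy
        recipe.append(fingerprint)
    return store, recipe


def restore(store, recipe):
    """Reassemble the original bytes from the recipe."""
    return b"".join(store[fp] for fp in recipe)


def reduction_ratio(data, store):
    """Logical bytes divided by physically stored bytes (metadata ignored)."""
    stored = sum(len(chunk) for chunk in store.values())
    return len(data) / stored if stored else 1.0


data = b"A" * 8192 + b"B" * 4096 + b"A" * 4096  # four 4 KiB chunks, two unique
store, recipe = dedup(data)
print(len(recipe), len(store), reduction_ratio(data, store))  # 4 2 2.0
```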

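Tao Li (李涛)

University of Florida

Tao Li, Ph.D., is a professor in the Department of Electrical and Computer Engineering, College of Engineering, University of Florida, and director of its Intelligent Computer Architecture Design Laboratory. He received his Ph.D. in computer engineering from the University of Texas at Austin in 2004. His awards include a Yahoo! Key Scientific Challenges Award (2013), the NSF CAREER Award (2009), IBM Faculty Awards (2006, 2007, and 2008), a Microsoft Research Safe and Scalable Multicore Computing Award (2008), and a Microsoft Research Trustworthy Computing Curriculum Award (2006). He twice (2012 and 2014) received the University of Florida College of Engineering's annual Doctoral Dissertation Advisor Award. He has made many pioneering contributions in areas including high-performance computer architecture; efficient, reliable, and low-power microprocessors and storage systems; data centers for cloud computing and big data; virtualization; parallel and distributed computing; novel and reconfigurable computing architectures; application-specific architectures; fault-tolerant multicore processors; on-chip interconnection networks; scalable architectures for multi-core and many-core systems; the impact of emerging technologies and applications on hardware and operating systems; embedded systems and systems-on-chip; and computer system performance evaluation. He has published more than 120 papers in prestigious international journals (mostly IEEE/ACM journals) and at top-tier computer architecture conferences including ISCA, MICRO, HPCA, ASPLOS, SIGMETRICS, PACT, and DSN, and holds more than 10 U.S. and Chinese invention patents. Nine of his papers were nominated for best paper awards by the program committees of HPCA'17, ICCD'16, ICPP'15, CGO'14, HPCA'11, DSN'11, MICRO'08, IISWC'07, and MASCOTS'06. He received Best Paper Awards at ICCD'16 and HPCA'11 and the 2015 Best Paper Award of IEEE Computer Architecture Letters.

Lecture title: Building New Non-Volatile Storage Systems for Emerging Technologies and Applications in the Big Data Era

Abstract: Data storage systems and architecture play a vital role in the evolution of society's information technology. This foundation, laid several decades ago, faces grand challenges today: power limits and reduced semiconductor reliability make it increasingly difficult to leverage technology scaling, and the shift toward cloud computing, virtualization, and big data analytics imposes new dependability and availability requirements on traditional architectures. At the same time, emerging technologies and applications could become game-changing enablers in the future. A new wave of memory and storage architectures can be built by exploiting innovative concepts, mechanisms, and implementations that take advantage of these emerging technologies and applications while addressing the new design challenges of the big data era. In this short lecture, I will address the opportunities and challenges of innovating next-generation non-volatile memory storage architectures in the big data era from a holistic perspective spanning device technology to emerging applications.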

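Haibo Chen (陈海波)

Shanghai Jiao Tong University

Haibo Chen is a professor and doctoral supervisor at Shanghai Jiao Tong University, a CCF Distinguished Member and Distinguished Speaker, and a senior member of ACM and IEEE. His research focuses on system software and system architecture. He was selected for the national Ten Thousand Talents Program for top young talents in 2014, and received the National Excellent Doctoral Dissertation Award in 2011 and the CCF Young Scientist Award in 2015. He currently serves as general chair of ACM SOSP 2017, steering committee chair of ACM APSys, an editor of Journal of Software, and an editorial board member of ACM Transactions on Storage. He has served many times on the program committees of prestigious international conferences including SOSP, ISCA, Oakland, PPoPP, EuroSys, USENIX ATC, and FAST. He has published more than 60 papers at leading conferences including SOSP, OSDI, EuroSys, USENIX ATC, ISCA, MICRO, HPCA, FAST, PPoPP, CCS, and USENIX Security, and in leading journals including IEEE TC, TSE, and TPDS. He received Best Paper Awards at ACM EuroSys 2015, ACM APSys 2013, and IEEE ICPP 2007, and a Best Paper Nomination at IEEE HPCA 2014. His research has also received industry awards including a Google Faculty Research Award, an IBM X10 Innovation Award, a NetApp Faculty Fellowship, and a Huawei Innovation Value Achievement Award.

Lecture title: Scalable In-memory Computing: A Perspective from Systems Software

Abstract: In-memory computing promises 1000x faster data access, which creates opportunities to raise transaction processing speed to a new level. In this talk, I will describe our recent research efforts to provide fast in-memory transactions at the scale of millions of transactions per second. Specifically, I will present how we leverage advanced hardware features such as HTM and RDMA to provide better single-node and distributed in-memory transactions and query processing, how operating systems and processor architecture could be refined to further ease and improve in-memory transaction processing, and how concurrency control protocols can be adapted accordingly to fit our needs.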
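Concurrency control is one of the layers the talk mentions adapting. As a single-threaded, minimal sketch of generic optimistic concurrency control (OCC), assumed here for illustration and not the speaker's HTM/RDMA-based protocol, each transaction buffers its writes, records the version of every key it reads, and at commit time validates that none of those versions has changed. In a real multi-core or distributed system the validation and write phases must execute atomically (for example under locks or inside a hardware transaction); that part is omitted. The class names are hypothetical.

```python
class VersionedStore:
    """In-memory key-value store where each key carries a version counter."""

    def __init__(self):
        self.data = {}  # key -> (version, value); missing keys count as version 0

    def version(self, key):
        return self.data.get(key, (0, None))[0]

    def begin(self):
        return Transaction(self)


class Transaction:
    def __init__(self, store):
        self.store = store
        self.read_set = {}   # key -> version observed at first read
        self.write_set = {}  # key -> value buffered until commit

    def read(self, key):
        if key in self.write_set:  # read-your-own-writes
            return self.write_set[key]
        version, value = self.store.data.get(key, (0, None))
        self.read_set.setdefault(key, version)
        return value

    def write(self, key, value):
        self.write_set[key] = value

    def commit(self):
        # Validation phase: abort if anything we read has since been overwritten.
        # (A real system must make validation + write phase atomic.)
        for key, seen in self.read_set.items():
            if self.store.version(key) != seen:
                return False
        # Write phase: install buffered writes and bump each key's version.
        for key, value in self.write_set.items():
            self.store.data[key] = (self.store.version(key) + 1, value)
        return True


store = VersionedStore()
init = store.begin()
init.write("x", 1)
print(init.commit())               # True
t1, t2 = store.begin(), store.begin()
t1.write("x", t1.read("x") + 1)    # both transactions read x == 1
t2.write("x", t2.read("x") + 10)
print(t1.commit(), t2.commit())    # True False: t2's read of x is stale
print(store.data["x"])             # (2, 2)
```

OCC avoids locking on reads, which suits read-heavy in-memory workloads, but it pays with aborts when conflicts are frequent.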
